Reinforcement learning

August 25, 2022

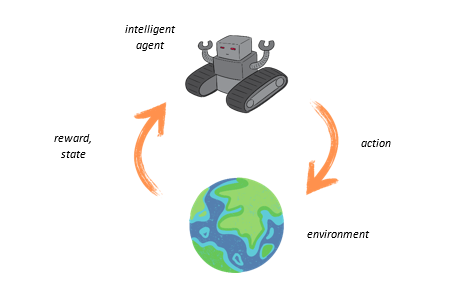

The two most well-known and widespread machine learning paradigms are unsupervised and supervised learning. However, over the last three decades, another machine learning paradigm, called reinforcement learning, has attracted significant attention in the research community. What is reinforcement learning? When we think about the nature of learning, the first thing that comes to mind is learning from interaction. Whether we are learning to make breakfast, give a presentation or drive a car, we interact with our environment, receive a response from it, and act accordingly. Broadly speaking, reinforcement learning is a computational approach to learning from interaction [1]. In this framework, the learner (in the reinforcement learning literature, the term intelligent agent is used instead of learner) is not told which action to take. Instead, it has to interact with the environment and discover, by trying them out, which actions yield the largest cumulative reward [1].

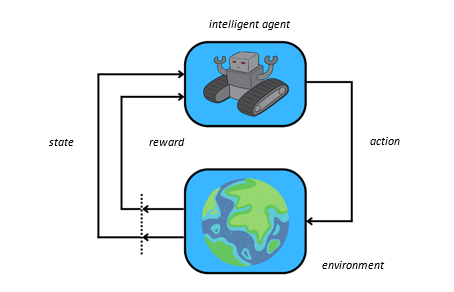

The two main elements of reinforcement learning are the intelligent agent and the environment.

The aim of the intelligent agent is to find an optimal outcome for the given task by exploring the environment and exploiting the acquired knowledge. Besides the intelligent agent and the environment, the following elements can be found in the reinforcement learning nomenclature (Fig. 1):

- policy,

- reward,

- value function and

- model of the environment (optional).

The policy determines the agent’s behavior at a given moment (time step). In other words, the policy determines which action the agent should select in a given state.
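A policy can be represented quite directly in code. The sketch below shows two common forms, assuming hypothetical state and action names and, for the second form, a table of estimated action values: a deterministic policy stored as a lookup table, and an epsilon-greedy policy that usually picks the best-looking action but occasionally explores, mirroring the exploration/exploitation balance mentioned above.

```python
import random

# Hypothetical states and actions, used only to illustrate what a policy is.
STATES = ["far_from_stop", "near_stop", "at_stop"]
ACTIONS = ["accelerate", "coast", "brake"]

# A deterministic policy is a lookup table: state -> action.
deterministic_policy = {
    "far_from_stop": "accelerate",
    "near_stop": "coast",
    "at_stop": "brake",
}


def act(policy, state):
    """Return the action a deterministic policy selects in the given state."""
    return policy[state]


def epsilon_greedy(q_values, state, epsilon, rng):
    """A stochastic policy that balances exploration and exploitation.

    q_values maps (state, action) pairs to estimated action values
    (an assumed input here). With probability epsilon a random action is
    explored; otherwise the action with the highest estimate is exploited.
    """
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q_values[(state, a)])


print(act(deterministic_policy, "near_stop"))  # coast
```

With `epsilon=0` the epsilon-greedy policy is purely greedy; with `epsilon=1` it acts completely at random.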

To successfully solve a reinforcement learning problem, it is necessary to quantify the behavior (set of actions) of an intelligent agent, to compare the different behaviors the agent generates during the learning process, and to evaluate the favorability of each state the agent reaches through the sequence of actions it takes. This is why the concept of reward was introduced into reinforcement learning theory. As the term itself suggests, a reward expresses a preference for specific behavior.
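To make "quantifying and comparing behaviors" concrete, the minimal sketch below collapses the rewards a behavior collects into a single number, the return. The discount factor `gamma` is an assumption not discussed in the text (it is a common convention in [1]); with `gamma = 1.0` the return is the plain sum of rewards. The two reward sequences are hypothetical.

```python
def discounted_return(rewards, gamma=1.0):
    """Cumulative reward G = r_1 + gamma*r_2 + gamma**2*r_3 + ...

    gamma=1.0 gives the plain (undiscounted) sum of rewards.
    """
    g = 0.0
    # Iterate backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Two hypothetical behaviors, each described by the rewards it collected.
behavior_a = [1.0, 0.0, 0.0, 5.0]
behavior_b = [2.0, 2.0, 0.0, 0.0]

print(discounted_return(behavior_a))       # 6.0
print(discounted_return(behavior_b))       # 4.0
print(discounted_return(behavior_a, 0.5))  # 1.625
print(discounted_return(behavior_b, 0.5))  # 3.0
```

The two behaviors swap ranking when `gamma` drops from 1.0 to 0.5, so how future rewards are weighted is itself part of the problem definition.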

While the reward indicates which behavior is more favorable in the current time step, the value function determines what is more favorable in terms of solving the overall problem [2]. Roughly speaking, the value of a given state is the total reward an intelligent agent can expect to accumulate in the future, starting from that state.
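When a model of the environment is available (the optional element listed above), the value function of a fixed policy can be computed by iterative policy evaluation. A minimal sketch, assuming a hypothetical three-state chain in which, under the policy, the agent advances one state with probability 0.5 (otherwise it stays put), receives a reward of -1 per step, and the episode ends in state 2.

```python
def evaluate_policy(transitions, n_states, terminal, gamma=1.0, tol=1e-10):
    """Iterative policy evaluation for a fixed policy.

    transitions[s] is a list of (probability, next_state, reward) tuples
    describing what happens in state s when the agent follows the policy.
    Returns V, where V[s] is the expected cumulative reward from state s.
    """
    v = [0.0] * n_states
    while True:
        delta = 0.0
        for s in range(n_states):
            if s in terminal:
                continue  # no future reward once the episode has ended
            # Bellman expectation: V(s) = sum over outcomes of p * (r + gamma * V(s')).
            new_v = sum(p * (r + gamma * v[s2]) for p, s2, r in transitions[s])
            delta = max(delta, abs(new_v - v[s]))
            v[s] = new_v  # in-place update
        if delta < tol:
            return v


# Hypothetical chain: advance with probability 0.5, otherwise stay; -1 per step.
transitions = {
    0: [(0.5, 1, -1.0), (0.5, 0, -1.0)],
    1: [(0.5, 2, -1.0), (0.5, 1, -1.0)],
    2: [],  # terminal state
}
values = evaluate_policy(transitions, n_states=3, terminal={2})
print([round(v, 6) for v in values])  # [-4.0, -2.0, 0.0]
```

Every step costs the same reward of -1, yet the state values differ: on average two steps are needed to advance one state, so each value reflects the whole remaining path rather than the next reward alone.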

Besides the intelligent agent, the environment, and the reward, the additional terms needed to define a Markov decision process (the formalism used to describe reinforcement learning problems) are the state and the action (Fig. 2). The state can be defined as a set of components generated as a result of the actions taken and/or of the agent-environment interaction. In reinforcement learning, an action is any decision the intelligent agent makes that affects the environment [1]. To successfully solve a reinforcement learning problem, besides choosing an appropriate technique, one must define the state space, the action space, and the reward function.
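The agent-environment interaction behind a Markov decision process can be sketched as a simple loop. The corridor environment, its action names and the -1 per-step reward are hypothetical choices, made only to show where the state space, the action space and the reward function appear in code.

```python
from dataclasses import dataclass


@dataclass
class CorridorMDP:
    """A hypothetical toy MDP: the agent moves along positions 0..length-1."""
    length: int = 5

    def states(self):
        # State space: integer positions along the corridor.
        return list(range(self.length))

    def actions(self):
        # Action space: two discrete moves.
        return ["left", "right"]

    def step(self, state, action):
        """Environment dynamics: return (next_state, reward, done)."""
        move = 1 if action == "right" else -1
        next_state = min(max(state + move, 0), self.length - 1)
        done = next_state == self.length - 1
        reward = -1.0  # reward function: -1 per step, so shorter episodes are better
        return next_state, reward, done


def run_episode(env, policy, start=0, max_steps=100):
    """Agent-environment loop: observe the state, act, receive a reward, repeat."""
    state, total_reward = start, 0.0
    for _ in range(max_steps):
        action = policy(state)                         # the agent decides
        state, reward, done = env.step(state, action)  # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


env = CorridorMDP()
print(run_episode(env, lambda s: "right"))  # -4.0
```

A policy that always moves left never reaches the end of the corridor, so its episode is cut off at `max_steps` with a lower total reward.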

Real-World Example

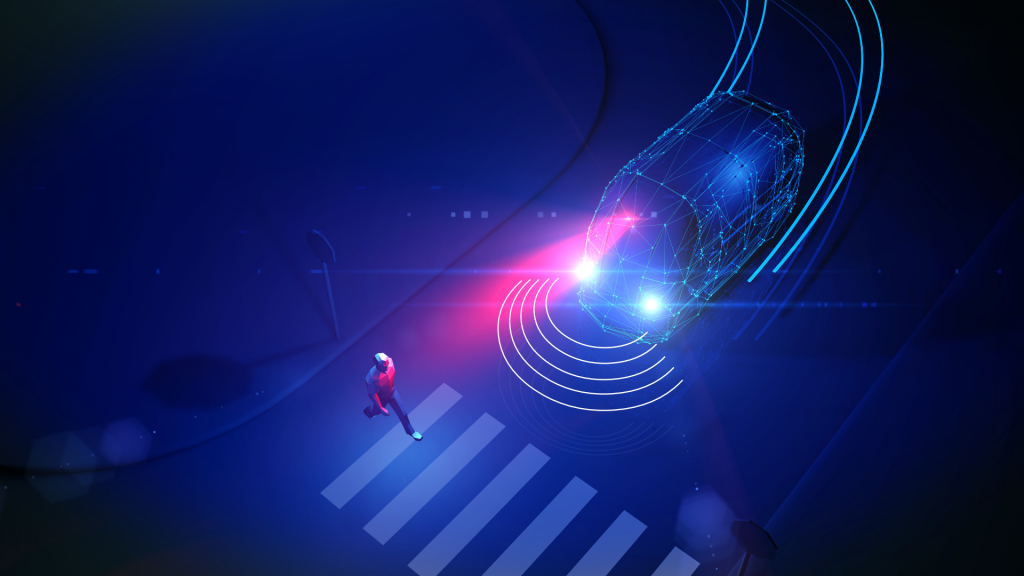

How can we create a system that helps the driver follow a recommended driving style in real time, with the overall aim of improving the energy efficiency of the electric buses used in urban transport in Belgrade [3]?

Why can this problem be solved by applying reinforcement learning techniques? Because what matters is the total result (the electrical energy consumed during one driving cycle), which is a consequence of all the actions the driver takes from the start pose (initial bus stop) to the final pose (final bus stop).
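To illustrate why this task depends on the whole sequence of actions, here is a minimal sketch that assumes the reward at each time step is the negative of the energy consumed in that step, so the return of one driving cycle equals minus the total energy. The per-step energy values (in Wh) are made up for illustration.

```python
# Hypothetical per-step energy consumption (Wh) over one driving cycle,
# from the initial bus stop to the final one. The numbers are made up.
driving_style_a = [300, 250, 50, 400, 200]
driving_style_b = [200, 200, 150, 200, 150]


def episode_return(energy_per_step_wh):
    """Return of one driving cycle, assuming reward_t = -(energy used at step t).

    Maximizing this return is equivalent to minimizing the total energy
    consumed, which depends on every action taken during the cycle.
    """
    return -sum(energy_per_step_wh)


print(episode_return(driving_style_a))  # -1200
print(episode_return(driving_style_b))  # -900
```

Style A contains the single cheapest step (50 Wh), yet style B consumes less energy over the whole cycle: an outcome that only becomes visible at the end of the episode.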

Another key question is: what makes this task an ideal machine learning problem? The answer is that the system does not need to be 100% precise (anyone who works on artificial intelligence problems knows that one of the main obstacles to the broader application of artificial intelligence techniques is that 100% precision cannot be achieved). For this reason, a system that suggests to the driver which action to take at every time step, rather than operating autonomously, is the most suitable solution.

What are the additional advantages of such systems? Reinforcement learning algorithms are, by their nature, based on adapting the behavior of an intelligent agent in a dynamic environment (Fig. 3), that is, on learning the policy from its own experience. In this context, imagine that such a system could also learn by following the driver’s commands and the driver’s reactions to new, unforeseen circumstances. In this way, the system could continually improve its performance. Another advantage is that such a system could be applied to other vehicles using an almost identical principle. Finally, since the system is intended from the outset for real-world operation on a known driving route, implementing this concept does not require any laboratory tests.
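The text does not commit to a specific algorithm. As one illustration of "learning the policy from its own experience", here is tabular Q-learning (a standard method described in [1], swapped in here purely for illustration) on a hypothetical five-position corridor. The learning rate, discount factor, exploration rate and episode count are assumed values; a real bus system with camera images as states would need function approximation instead of a table.

```python
import random


def q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9,
               epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical corridor.

    The agent starts at position 0; position n_states - 1 is the goal.
    Actions: 0 = move left, 1 = move right. Reward: -1 per time step.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1  # ties go to "right"
            s_next = min(max(s + (1 if a == 1 else -1), 0), goal)
            r = -1.0
            # Q-learning target: reward plus discounted best value of the next state.
            best_next = 0.0 if s_next == goal else max(q[s_next])
            q[s][a] += alpha * (r + gamma * best_next - q[s][a])
            s = s_next
    return q


q = q_learning()
greedy_policy = ["right" if q_s[1] >= q_s[0] else "left" for q_s in q[:-1]]
print(greedy_policy)
```

Nothing in the loop is told that moving right is correct; the preference emerges only from the rewards the agent experiences, and with these settings the greedy policy read off the table should be to move right in every non-goal position.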

What will the action space, state space and reward function be for this problem? Since the total electrical energy consumed during one driving cycle is the quantity we need to measure, the reward function follows directly from the problem requirements. The state space can be defined as a monocular camera image combined with the measured bus speed [4]. Actions can be defined as the percentage levels on the throttle and brake pedals. This is reasonable because the output of the system should be something like “press the throttle or brake pedal more or less”. Note, however, that if we wanted the system to be fully autonomous, these two commands could be reparametrized in terms of a speed set-point [4].
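The state, action and reward definitions above can be written down as simple data types. This is a sketch under several assumptions: the image is a placeholder grayscale frame, the reward is the negative energy consumed per time step, and the `advice` function with its 5-percentage-point dead band is a hypothetical way of turning a recommended action into hints like "press more or less throttle or brake pedal".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BusState:
    """State: a monocular camera frame plus the measured bus speed [4]."""
    image: List[List[int]]  # hypothetical grayscale frame, rows of 0-255 pixels
    speed_kmh: float


@dataclass
class PedalAction:
    """Action: percentage levels on the throttle and brake pedals."""
    throttle_pct: float
    brake_pct: float

    def __post_init__(self):
        # Reject pedal levels outside the 0-100 % range.
        for name in ("throttle_pct", "brake_pct"):
            value = getattr(self, name)
            if not 0.0 <= value <= 100.0:
                raise ValueError(f"{name} must be in [0, 100], got {value}")


def step_reward(energy_wh: float) -> float:
    """Assumed reward: the negative of the energy consumed in this time step."""
    return -energy_wh


def advice(current: PedalAction, recommended: PedalAction,
           tol: float = 5.0) -> List[str]:
    """Turn a recommended action into driver-facing hints (hypothetical helper).

    Differences of at most tol percentage points produce no hint.
    """
    hints = []
    for pedal, cur, rec in (
        ("throttle", current.throttle_pct, recommended.throttle_pct),
        ("brake", current.brake_pct, recommended.brake_pct),
    ):
        if rec - cur > tol:
            hints.append(f"press {pedal} more")
        elif cur - rec > tol:
            hints.append(f"press {pedal} less")
    return hints


current = PedalAction(throttle_pct=60.0, brake_pct=0.0)
recommended = PedalAction(throttle_pct=35.0, brake_pct=0.0)
print(advice(current, recommended))  # ['press throttle less']
```

Keeping the action in pedal percentages matches the advisory use case; for full autonomy the same interface could be swapped for a speed set-point, as noted above.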

References:

- [1] Sutton, R. S., & Barto, A. G. (1998). Reinforcement learning: An introduction. MIT Press.

- [2] Silver, D. Introduction to Reinforcement Learning with David Silver. DeepMind. https://www.deepmind.com/learning-resources/introduction-to-reinforcement-learning-with-david-silver.

- [3] https://rs.n1info.com/biznis/tender-za-primenu-sistema-koji-pomaze-vozacima-elektricnih-autobusa/, accessed August 25, 2022.

- [4] Kendall, A., Hawke, J., Janz, D., Mazur, P., Reda, D., Allen, J. M., … & Shah, A. (2019, May). Learning to drive in a day. In 2019 International Conference on Robotics and Automation (ICRA) (pp. 8248-8254). IEEE.